The Context

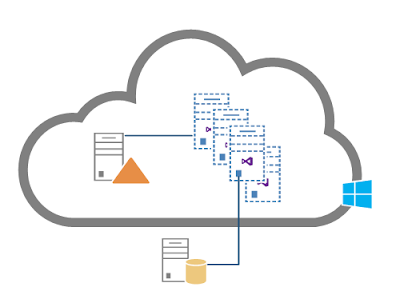

The website was a Visual Studio 2010 project using Azure SDK 1.6 and a library call AspNetProvider that was part of Microsoft's sample few years ago to manage session and membership. Using the AspNetProvider library the session was saved in Azure blob storage, and the membership was saved in an SQL database.The Goal

The application must stay a Visual Studio 2010 project, but using the most-recent Azure SDK and Azure Storage Client as possible.The Solution

- Azure SDK 2.1

- Azure.StorageClient 4.0

- Universal Provider version 2.1

- OS famille 4

The Journey

Migration from SDK 1.6 to SDK 2.1

Migration from AspNetProvider to UniversalProvider

Install-Package UniversalProvider -version 1.2Check the web.config file to clean the membership connections.

Migration of the Azure Storage Client

Install-Package Azure.Storage.ClientMigration of the membership data

-- ========================================================

-- Description: Migrate data from asp_* tables

-- to the new table used by Universal provider

-- ========================================================

DECLARE @CNT_NewTable AS INT

DECLARE @CNT_OldTable AS INT

-- --------------------------------------------------------

-- Applications -------------------------------------------

INSERT INTO dbo.Applications (ApplicationName, ApplicationId, Description)

SELECT n.ApplicationName, n.ApplicationId, n.Description

FROM dbo.aspnet_Applications o

LEFT JOIN dbo.Applications n ON o.ApplicationId = n.ApplicationId

WHERE n.ApplicationId IS NULL

SELECT @CNT_NewTable = Count(1) from dbo.Applications

SELECT @CNT_OldTable = Count(1) from aspnet_Applications

PRINT 'Application Count: ' + CAST(@CNT_NewTable AS VARCHAR) + ' = ' + CAST(@CNT_OldTable AS VARCHAR)

-- --------------------------------------------------------

-- Roles --------------------------------------------------

INSERT INTO dbo.Roles (ApplicationId, RoleId, RoleName, Description)

SELECT o.ApplicationId, o.RoleId, o.RoleName, o.Description

FROM dbo.aspnet_Roles o

LEFT JOIN dbo.Roles n ON o.RoleId = n.RoleId

WHERE n.RoleId IS NULL

SELECT @CNT_NewTable = Count(1) from dbo.Roles

SELECT @CNT_OldTable = Count(1) from aspnet_Roles

PRINT 'Roles Count : ' + CAST(@CNT_NewTable AS VARCHAR) + ' = ' + CAST(@CNT_OldTable AS VARCHAR)

-- --------------------------------------------------------

-- Users --------------------------------------------------

INSERT INTO dbo.Users (ApplicationId, UserId, UserName, IsAnonymous, LastActivityDate)

SELECT o.ApplicationId, o.UserId, o.UserName, o.IsAnonymous, o.LastActivityDate

FROM dbo.aspnet_Users o LEFT JOIN dbo.Users n ON o.UserId = n.UserID

WHERE n.UserID IS NULL

SELECT @CNT_NewTable = Count(1) from dbo.Users

SELECT @CNT_OldTable = Count(1) from aspnet_Users

PRINT 'Users count: ' + CAST(@CNT_NewTable AS VARCHAR) + ' >= ' + CAST(@CNT_OldTable AS VARCHAR)

-- --------------------------------------------------------

-- Memberships --------------------------------------------

INSERT INTO dbo.Memberships (ApplicationId, UserId, Password,

PasswordFormat, PasswordSalt, Email, PasswordQuestion, PasswordAnswer,

IsApproved, IsLockedOut, CreateDate, LastLoginDate, LastPasswordChangedDate,

LastLockoutDate, FailedPasswordAttemptCount,

FailedPasswordAttemptWindowStart, FailedPasswordAnswerAttemptCount,

FailedPasswordAnswerAttemptWindowsStart, Comment)

SELECT o.ApplicationId, o.UserId, o.Password,

o.PasswordFormat, o.PasswordSalt, o.Email, o.PasswordQuestion, o.PasswordAnswer,

o.IsApproved, o.IsLockedOut, o.CreateDate, o.LastLoginDate, o.LastPasswordChangedDate,

o.LastLockoutDate, o.FailedPasswordAttemptCount,

o.FailedPasswordAttemptWindowStart, o.FailedPasswordAnswerAttemptCount,

o.FailedPasswordAnswerAttemptWindowStart, o.Comment

FROM dbo.aspnet_Membership o

LEFT JOIN Memberships n ON o.ApplicationId = n.ApplicationId

AND o.UserId = n.UserId

WHERE n.UserId IS NULL AND n.ApplicationId IS NULL

SELECT @CNT_NewTable = Count(1) from dbo.Memberships

SELECT @CNT_OldTable = Count(1) from aspnet_Membership

PRINT 'Memberships count: ' + CAST(@CNT_NewTable AS VARCHAR) + ' >= ' + CAST(@CNT_OldTable AS VARCHAR)

-- -------------------------------------------------------

-- UsersInRoles ------------------------------------------

TRUNCATE TABLE dbo.UsersInRoles

INSERT INTO dbo.UsersInRoles SELECT * FROM dbo.aspnet_UsersInRoles

SELECT @CNT_NewTable = Count(1) from dbo.UsersInRoles

SELECT @CNT_OldTable = Count(1) from aspnet_UsersInRoles

PRINT 'UsersInRoles count: ' + CAST(@CNT_NewTable AS VARCHAR) + ' >= ' + CAST(@CNT_OldTable AS VARCHAR)Migration from OSFamilly 1 to 4

Open the file .cscfg and edit the OS Family attribute. It's in the ServiceConfiguration node.<ServiceConfiguration servicename="MyApp" osFamily="4" osVersion="*" ...> Wrapping up

The only step left is to deploy in the staging environment to see if everything is working as expected. would recommend also to plan to upgrade as soon as possible because the Azure SDK 2.1 official retirement date is November 2015. I hope this post could help you, even if you are migrating from and to a different version. Any comments, suggestions and/or questions are welcome.~ Frank Boucher

![clip_image001[4] clip_image001[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiyWQ10soVKrp9ANqYuY3AneSXId9yRq2eOAYYXhSraXSMz18HNDB_HORSkCotDlPOI7pIGO4nHidTmeGR4qCFs4GJ8WR5C5ZZjiH6j76xqxkcVbAaRs8Mk6tUkUSCdKjGU2P2faazqCOc/?imgmax=800)

![clip_image002[4] clip_image002[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjSvfAmShSxTiJPSI6QqBlV76JsLYSxxLMxi-9AZnDCYMN14nT4a555e2hxv3Livq-fD7uXZ52wnVvU2Oks626MSvQw1pOxLSgFk8pVxgvD-70CZ_rrL3CNK8Aag8aGLB3ZYzUJiMUNzug/?imgmax=800)