ClickOnce

The easy way to deploy .NET applications

- Introduction

- The Application

- Deployment of an application

- Installation

- Update

- Uninstalling the Application

- Examining the Application and Deployment Manifests

- The Deployment Manifest

- The Application Manifest

- Security

- Sign the ClickOnce manifest

- Security permissions

- FAQs

- Extra

- Current Version

- Update Detail

- Updating manually

- Downloading Files on Demand

- References

Introduction

ClickOnce is a new application deployment technology that makes deploying a Windows Forms based application as easy as deploying a web application.

ClickOnce applications can be deployed via web servers, file servers, or CDs. A ClickOnce application can be installed, meaning it gets Start menu and Add/Remove Programs entries, or it can simply be run and cached. ClickOnce can be configured in several ways to check automatically for application updates. Alternatively, applications can use the ClickOnce APIs (System.Deployment) to control when updates happen.

Using ClickOnce requires that the target client already have the .NET Framework 2.0 installed. Visual Studio makes packaging and deploying the .NET Framework simple: select the prerequisites your application has (e.g., the .NET Framework 2.0 and MDAC 9.0) and Visual Studio generates a bootstrapper file that installs all of the specified prerequisites when run. On the server side, ClickOnce needs only an HTTP 1.1 server or, alternatively, a file server.

So let’s start and build a simple application…

The Application

Create a simple Windows Forms application named MyClickTest. Put a label on Form1 and set its Text property to “Version 1”. That is all for the first version of our application.

Deployment of an application

First deployment

To start configuring the publication, open the project properties: right-click the project name [MyClickTest] in the Solution Explorer (at the top right of the screen), or use the menu Project | MyClickTest Properties. Then, in the Signing tab, check [Sign the ClickOnce manifests].

Next, define the security level. For the moment, check the option that marks the application as "Full trust". For safety, it is better to avoid this option; a little later in this tutorial we will see how to define the security settings properly.

Now for the main part: publication. In the Publish tab you define the publishing location, which determines whether the application is published to a web site, an FTP site, or a shared network folder.

In some scenarios, it may be important to first publish the application to a staging server before moving it to another server for deployment. While the Publishing Location text box specifies the location to which you'll publish the application, the Installation URL text box allows you to specify where the users should install your application. As an example, consider the following scenario:

Suppose the Publishing Location is set to http://server1/MyClickTest/ and the Installation URL to http://server2/MyClickTest/. When you publish the application using these settings, the application will deploy to http://server1/MyClickTest/. However, you will need to manually copy all of the files located in the folder mapped to http://server1/MyClickTest/ into the folder mapped to http://server2/MyClickTest/. Users will then install the application from http://server2/MyClickTest/ (that is, through http://server2/MyClickTest/index.htm). More importantly, the application will check for updates from http://server2/MyClickTest/ and not http://server1/MyClickTest/.

You also define the installation mode: either the application is available online only (downloaded every time it runs), or it is available both online and offline, in which case it is installed locally and appears in the Start menu. Other configuration options are available:

· Application Files: lists all files associated with the project (Figure 1).

Figure 1

· Prerequisites: specifies the applications that must be installed for your application to run (Figure 2). The bootstrapper is very extensible and can be used to install third-party and custom prerequisites.

Figure 2

To add your own prerequisite you need a bootstrapper package. Once the package is created, it is automatically included in the prerequisite list. You can generate one with the “Bootstrapper Manifest Generator” tool (http://www.gotdotnet.com/workspaces/workspace.aspx?id=ddb4f08c-7d7c-4f44-a009-ea19fc812545).

· Updates: specifies whether the application checks the server for updates, and when it does so (before or after the application starts) (Figure 3). If you check for updates after the application has started, you can specify how often to check. The most frequent check you can perform is once per day.

Figure 3

To prevent users from running an older version of your application, you can specify the minimum version required. This option is useful if you have just discovered a major bug in your application; to prevent users from rolling back to the previous version after the update, you can set the current version number as the minimum required version.
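The effect of a minimum required version can be pictured as a simple version comparison. The following is a minimal sketch, not ClickOnce's own implementation: the class `MinimumVersionCheck` and the method `IsUpdateMandatory` are hypothetical names, and the sketch assumes an update becomes mandatory whenever the installed version is lower than the minimum required version.

```csharp
using System;

// Hypothetical helper (not part of the ClickOnce API): illustrates how a
// minimum required version turns an optional update into a mandatory one.
public static class MinimumVersionCheck
{
    // Returns true when the installed version is older than the minimum
    // required version. A null minimum means no minimum was specified.
    public static bool IsUpdateMandatory(Version installed, Version minimumRequired)
    {
        if (installed == null)
        {
            throw new ArgumentNullException("installed");
        }
        if (minimumRequired == null)
        {
            return false;
        }
        return installed < minimumRequired;
    }
}
```

For example, with version 1.0.0.0 installed and 1.0.0.2 set as the minimum, `IsUpdateMandatory` returns true; once the user runs 1.0.0.2, it returns false.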

By default, your application will check for updates from locations in the following order:

1. Update location (if specified in this window).

2. Installation URL (if specified in the Publish tab of the project properties).

3. Publish location.
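The lookup order listed above amounts to a "first one that is set wins" rule. Here is a minimal sketch of that rule; the class `UpdateSource` and the method `Resolve` are hypothetical names used only for illustration, and an unspecified location is assumed to be represented by null.

```csharp
using System;

// Hypothetical helper (not part of the ClickOnce API): picks the location
// used for update checks, following the order described in the text.
public static class UpdateSource
{
    public static Uri Resolve(Uri updateLocation, Uri installationUrl, Uri publishLocation)
    {
        // 1. Update location, if specified.
        if (updateLocation != null)
        {
            return updateLocation;
        }
        // 2. Installation URL, if specified.
        if (installationUrl != null)
        {
            return installationUrl;
        }
        // 3. Publish location, which is always expected to exist.
        if (publishLocation == null)
        {
            throw new ArgumentNullException("publishLocation");
        }
        return publishLocation;
    }
}
```

With the staging scenario described earlier (no update location, installation URL http://server2/MyClickTest/, publish location http://server1/MyClickTest/), `Resolve` returns the server2 address.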

· Options: specifies various additional information (Figure 4).

Figure 4

Once all the information is filled in, click the "Publish Wizard" button (also available in the [Build] menu or by right-clicking in the [Solution Explorer]) to start the wizard that configures the ClickOnce installation.

The Publish Wizard enables you to configure all the options necessary for the deployment of the application.

Again you can specify the publishing location…

… the installation mode (online or offline)…

Because all the information was already specified in the previous screens, pressing the [Finish] button right away gives the same result.

Clicking the button automatically creates a new web page that publishes the application. You can see the page by typing the web address specified previously (http://localhost/MyClickTest/index.htm).

Installation

To install the application, just click the [Install] button. This launches the installation on the machine, and you can see the check for updates run right before the application launches:

Now your application is available in the Start menu.

Update

Now go back to Visual Studio and change the label to Version 2. Re-publish the application from the Build menu (or by right-clicking in the [Solution Explorer]). Then launch the application from the Start menu. A popup should be displayed asking you to update the version on your local machine.

Now you should see Version 2 in the label.

Uninstalling the Application

To uninstall a ClickOnce application, users can go to the Control Panel and launch the "Add or Remove Programs" application. In the "Change or Remove Programs" section, users then select the application to uninstall and click the Change/Remove button.

If the application has been updated at least once, users will see the option "Restore the application to its previous state," which allows users to roll back the application to its previous version. Users then select the option "Remove the application from this computer" to uninstall the application.

Examining the Application and Deployment Manifests

When you use the Publish Wizard in ClickOnce, Visual Studio will publish your application to the URL you have indicated. For example, if you indicated http://localhost/MyClickTest/ as the publishing directory and you mapped the virtual directory MyClickTest to the local path C:\Inetpub\wwwroot\MyClickTest, two types of files will be created under the C:\Inetpub\wwwroot\MyClickTest directory:

· The application manifest

· The deployment manifest

The Application Manifest

When you publish your application, a folder and four files are automatically generated in the publishing directory. They are:

· A folder containing the deployment files (MyClickTest_1_0_0_0; see next section).

· An application manifest MyClickTest.application. The index.htm file points to this application (by default is publish.htm).

· A version-specific application manifest; for example, MyClickTest_1_0_0_0.application.

· A index.htm (publish.htm) web page containing instructions on how to install the application.

· A setup application (setup.exe).

The application manifest MyClickTest.application is an XML file that contains detailed information about the current application as well as its version number. It lets users know if they need to update application.

When you re-publish your application, the contents of MyClickTest.application, publish.htm, and setup.exe will be modified, while one new version-specific application manifest (for example, MyClickTest_1_0_0_1.application) and a new folder (for example, MyClickTest_1_0_0_1) containing the new versions of deployment files will be created.

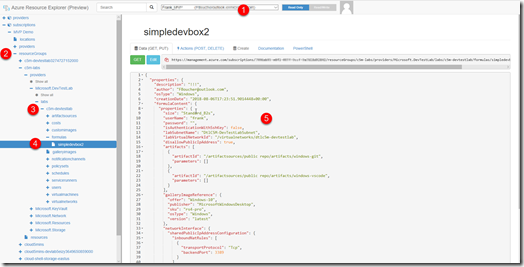

The Deployment Manifest

Locate the deployment manifest, MyClickTest.exe.manifest, in the C:\Inetpub\wwwroot\MyClickTest\MyClickTest _1_0_0_0 directory. It contains information about the application (such as dependencies and attached files). The MyClickTest.exe.deploy file is your application's executable. The other files and databases in the directory are used by your application. During installation, these files will be downloaded onto your users' machines.
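Because the .application file is plain XML, its version information can be read with ordinary XML tooling. The sketch below is an illustration only: `DeploymentManifestReader` and its members are hypothetical names, and `SampleManifest` is a heavily trimmed, hand-written excerpt that assumes the usual `assemblyIdentity` and `deployment` elements; a real manifest generated by Visual Studio contains many more elements and attributes.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper: reads version information from the XML of a
// ClickOnce .application file.
public static class DeploymentManifestReader
{
    private static readonly XNamespace Asm1 = "urn:schemas-microsoft-com:asm.v1";
    private static readonly XNamespace Asm2 = "urn:schemas-microsoft-com:asm.v2";

    // Simplified sample, assumed for illustration only.
    public const string SampleManifest =
        @"<asmv1:assembly manifestVersion=""1.0""
            xmlns=""urn:schemas-microsoft-com:asm.v2""
            xmlns:asmv1=""urn:schemas-microsoft-com:asm.v1"">
  <assemblyIdentity name=""MyClickTest.application"" version=""1.0.0.1""
            xmlns=""urn:schemas-microsoft-com:asm.v1"" />
  <deployment install=""true"" minimumRequiredVersion=""1.0.0.1"" />
</asmv1:assembly>";

    // Returns the version declared on the assemblyIdentity element.
    public static Version ReadVersion(string manifestXml)
    {
        XElement root = XDocument.Parse(manifestXml).Root;
        XElement identity = root.Element(Asm1 + "assemblyIdentity");
        if (identity == null)
        {
            throw new FormatException("assemblyIdentity element not found.");
        }
        return new Version((string)identity.Attribute("version"));
    }

    // Returns the minimum required version, or null when none is declared.
    public static Version ReadMinimumRequiredVersion(string manifestXml)
    {
        XElement root = XDocument.Parse(manifestXml).Root;
        XElement deployment = root.Element(Asm2 + "deployment");
        if (deployment == null)
        {
            return null;
        }
        string minimum = (string)deployment.Attribute("minimumRequiredVersion");
        return minimum == null ? null : new Version(minimum);
    }
}
```

Calling `ReadVersion(DeploymentManifestReader.SampleManifest)` on the sample yields version 1.0.0.1, the same value for which a version-specific folder and file would be created on re-publishing.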

Security

Sign the ClickOnce manifests

You can sign the manifests of your ClickOnce application, just as you can sign your .NET assembly.

With Visual Studio 2005, you no longer need to use a command-prompt tool. In the [Signing] tab of the project properties, just check the box [Sign the assembly] and, in the drop-down menu, pick the option to create a new “snk” file.

You can also sign the manifests of your ClickOnce application with a certificate. Use a certificate that is already installed (button [Select from Store]) or import your own certificate (button [Select from File]).

Security permissions

Good security grants as few freedoms as possible; more precisely, it grants only the rights that are necessary. You can do this manually by choosing the rights you grant to your application, or you can let Visual Studio do it for you.

Click on [Calculate Permissions].

Visual Studio will then analyze your code in order to detect (according to the methods you call) the permissions it needs.

FAQs

Does "ClickOnce" download the entire application every time I update it?

No. “ClickOnce” only downloads the files and assemblies that have changed.

I want my application to be installed to a specific hard drive location, how can I achieve this?

The install location of a "ClickOnce" application cannot be managed by the application. This is an important part of making "ClickOnce" applications safe, reversible, and easy to administer.

Can I use compression to make my application download faster?

Yes, “ClickOnce” supports HTTP 1.1 compression. Simply enable this on your web server & the files “ClickOnce” downloads will be compressed.

Can I install a "ClickOnce" application per-machine?

No. All “ClickOnce” applications are installed per-user. Each user is totally isolated from one another and must install their own copy. If your application needs to be installed per-machine, you should use MSI.
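The first answer above (only changed files are downloaded) can be pictured as a comparison between two file lists. The sketch below is an illustration under stated assumptions, not ClickOnce's actual implementation: it assumes each version is described by a map from file path to a content hash, and `ChangedFiles.Find` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: lists the files that would need to be downloaded
// when moving from one version to the next.
public static class ChangedFiles
{
    public static List<string> Find(
        IDictionary<string, string> oldHashes,
        IDictionary<string, string> newHashes)
    {
        List<string> changed = new List<string>();
        foreach (KeyValuePair<string, string> entry in newHashes)
        {
            string oldHash;
            // A file is downloaded if it is new or if its hash differs.
            if (!oldHashes.TryGetValue(entry.Key, out oldHash) || oldHash != entry.Value)
            {
                changed.Add(entry.Key);
            }
        }
        changed.Sort(StringComparer.Ordinal);
        return changed;
    }
}
```

If only MyClickTest.exe changes between two versions, the returned list contains that single file, and every unchanged file is left out of the download.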

Extra

A lot of functionality is available through the System.Deployment.Application namespace.

Let us present some of it. First, look at the interface.

Current Version

In the Load event handler of the form, retrieve the information about the current version.

[code:c#;ln=on]
// Requires: using System; using System.Deployment.Application;
private void Form1_Load(object sender, EventArgs e)
{
    // Check whether the application was deployed with ClickOnce.
    chkClickDeploy.Checked = ApplicationDeployment.IsNetworkDeployed;

    if (ApplicationDeployment.IsNetworkDeployed)
    {
        // A ClickOnce deployment exposes extra information, such as
        // the current version and the location used for updates.
        ApplicationDeployment oDeploy = ApplicationDeployment.CurrentDeployment;
        txtCurrentVersion.Text = oDeploy.CurrentVersion.ToString();
        txtCurrentServer.Text = oDeploy.UpdateLocation.ToString();
    }

    this.txtinstallFolder.Text = Environment.CurrentDirectory;
}[/code]

Update Detail

Clicking the [Check Now] button checks whether a new release is available and, if so, retrieves information about it.

[code:c#;ln=on]
// Requires: using System; using System.Deployment.Application;
// using System.Windows.Forms;
private void btnCheck_Click(object sender, EventArgs e)
{
    if (ApplicationDeployment.IsNetworkDeployed)
    {
        ApplicationDeployment oDeploy = ApplicationDeployment.CurrentDeployment;
        UpdateCheckInfo oInfoChecker;
        try
        {
            // Check whether a newer version is available.
            oInfoChecker = oDeploy.CheckForDetailedUpdate();
        }
        catch (DeploymentDownloadException ex)
        {
            // The manifest could not be downloaded (for example, server unreachable).
            MessageBox.Show("Could not check for updates: " + ex.Message);
            return;
        }

        chkUpdateAvailable.Checked = oInfoChecker.UpdateAvailable;
        if (oInfoChecker.UpdateAvailable)
        {
            // Retrieve the latest available version (intermediate versions
            // can be skipped), whether the update is mandatory, and its size.
            txtLastVersion.Text = oInfoChecker.AvailableVersion.ToString();
            chkUpdateMandatory.Checked = oInfoChecker.IsUpdateRequired;
            txtLastVersionServer.Text = oDeploy.UpdateLocation.ToString();
            txtUpdateSize.Text = oInfoChecker.UpdateSizeBytes.ToString();
        }
        else
        {
            MessageBox.Show("No update available...");
        }
    }
}[/code]
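The handler above shows the update size as a raw byte count. As an optional refinement, a small helper can turn that number into something easier to read. This is only a sketch: `UpdateSizeFormatter` is a hypothetical name, and the choice of 1024-based units with one decimal place is an assumption, not something ClickOnce prescribes.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: formats a byte count such as
// UpdateCheckInfo.UpdateSizeBytes for display.
public static class UpdateSizeFormatter
{
    private static readonly string[] Units = { "B", "KB", "MB", "GB" };

    public static string Format(long bytes)
    {
        if (bytes < 0)
        {
            throw new ArgumentOutOfRangeException("bytes");
        }

        double value = bytes;
        int unit = 0;
        // Divide by 1024 until the value fits the current unit.
        while (value >= 1024 && unit < Units.Length - 1)
        {
            value /= 1024;
            unit++;
        }

        // Whole bytes need no decimals; larger units get one decimal place.
        string format = unit == 0 ? "0" : "0.0";
        return value.ToString(format, CultureInfo.InvariantCulture) + " " + Units[unit];
    }
}
```

In the handler, the last assignment could then read `txtUpdateSize.Text = UpdateSizeFormatter.Format(oInfoChecker.UpdateSizeBytes);`.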

Updating manually

Clicking the [Update] button updates the application to the latest available version. This can be done synchronously or asynchronously. The new version takes effect after the application is restarted.

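[code:c#;ln=on]
// Requires: using System; using System.ComponentModel;
// using System.Deployment.Application; using System.Windows.Forms;
private void btnUpdate_Click(object sender, EventArgs e)
{
    if (ApplicationDeployment.IsNetworkDeployed)
    {
        ApplicationDeployment oDeploy = ApplicationDeployment.CurrentDeployment;
        UpdateCheckInfo oInfoChecker = oDeploy.CheckForDetailedUpdate();
        if (oInfoChecker.UpdateAvailable)
        {
            if (optSynch.Checked)
            {
                // Synchronous update: blocks until the download completes.
                oDeploy.Update();
                MessageBox.Show("Update done successfully...");
            }
            else
            {
                // Asynchronous update: progress and completion are reported through events.
                oDeploy.UpdateCompleted += new AsyncCompletedEventHandler(oDeploy_UpdateCompleted);
                oDeploy.UpdateProgressChanged += new DeploymentProgressChangedEventHandler(oDeploy_UpdateProgressChanged);
                oDeploy.UpdateAsync();
            }
        }
    }
}

void oDeploy_UpdateProgressChanged(object sender, DeploymentProgressChangedEventArgs e)
{
    this.toolStripProgressBar1.Value = e.ProgressPercentage;
}

void oDeploy_UpdateCompleted(object sender, AsyncCompletedEventArgs e)
{
    // Report a failure or cancellation instead of always claiming success.
    if (e.Error != null)
    {
        MessageBox.Show("Update failed: " + e.Error.Message);
    }
    else if (e.Cancelled)
    {
        MessageBox.Show("Update cancelled.");
    }
    else
    {
        MessageBox.Show("Update done successfully...");
    }
}

private void btnReStart_Click(object sender, EventArgs e)
{
    // Restart so that the newly downloaded version takes effect.
    Application.Restart();
}
[/code]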

Downloading Files on Demand

If you have a large-size help file, this adds to the download time for your users. And if you have multiple help files in your application, then the entire application will also take longer to install. A better approach is to selectively download the help files as and when you need them. For example, you can set the help file to download after the application installs, when a user clicks on the Display Help button.

To specify that the help file be loaded as and when needed, you need to perform the following steps:

· In Visual Studio, in the [Solution Explorer], add a text file: ClickHelp.txt.

· Go back to the project properties, [Publish] tab. Click the [Application Files] button. Change the Publish Status of ClickHelp.txt to Include.

· Click the drop-down menu in the Download Group and select (New).

· Specify a name for the download group, such as: Help.

· Add a new button on the form. Then double-click on it to add this handler code.

[code:c#;ln=on]
// Requires: using System; using System.Deployment.Application;
// using System.IO; using System.Windows.Forms;
private void button1_Click(object sender, EventArgs e)
{
    // Check whether the application was deployed with ClickOnce.
    if (ApplicationDeployment.IsNetworkDeployed)
    {
        ApplicationDeployment oDeploy = ApplicationDeployment.CurrentDeployment;

        // Register a handler for the asynchronous download, then
        // download the file(s) in the "Help" group.
        oDeploy.DownloadFileGroupCompleted +=
            new DownloadFileGroupCompletedEventHandler(oDeploy_DownloadFileGroupCompleted);
        oDeploy.DownloadFileGroupAsync("Help");
    }
}

void oDeploy_DownloadFileGroupCompleted(object sender, DownloadFileGroupCompletedEventArgs e)
{
    // Stop if the download failed; the file would not be available.
    if (e.Error != null)
    {
        MessageBox.Show("Download failed: " + e.Error.Message);
        return;
    }

    // If the completed group is "Help", display the content of the file.
    if (e.Group.Equals("Help"))
    {
        MessageBox.Show(File.ReadAllText("ClickHelp.txt"));
    }
}
[/code]

References

- O'Reilly, Use ClickOnce to Deploy Windows Applications (2006)